This is the first of many blog posts I assume I will end up writing in the future. Because hey, we learn every day! Today’s edition is all about technical things I’ve learned recently as part of my new rotation in Platform Services.

Now, I don’t usually write about my rotations until the very end, but in this team in particular, I finish every week with so much information in my head that I want to share it, and writing it down also helps me digest it all.

💡 Addressing the gap in my knowledge

Before joining this team around 6 weeks ago, I had a huge gap in my technical knowledge around infrastructure and the magical computers in the sky. What I knew stretched to the basics of website hosting, buying a domain name and linking it to a website, and bits of File Transfer Protocol (FTP – omg, remember those days?!). I knew all of this from playing around when I was little.

Technology has moved on at lightning speed since the days when my world view of technology was me building a simple website and blog on my family’s computer. As I’m learning, there is now far more that I don’t know I don’t know.

📒 Scribbles from my notebook

These are some of my notes on how I understand the different technologies I now use at work. Some will be overly simplified (mostly for my benefit, but also for anyone interested in diving into these new technologies!). Please feel free to comment on any mistakes I’ve made. We are always learning 😊

Cloud Computing

The term Cloud Computing sounds like a buzzword that people just throw around. As a newbie, this was a concept I found difficult to get my head around. But after learning from my team, AWS Newbies and A Cloud Guru, I feel like I have a better grasp of it, as well as its benefits over traditional systems (mainly cost, efficiency and scalability). The definition that makes the most sense to me, and one that I refer back to often, is:

“Cloud Computing is the on-demand delivery of compute power, database storage, applications and other IT resources. This refers to things like memory, storage, CPU, databases, servers etc. – basically whatever makes up a computer.”

Although it is called Cloud Computing, everything that is “spun up” lives in physical data centres across the world. It is called Cloud because you don’t have to manage a physical server yourself; that is someone else’s responsibility, in this case… AWS.

Amazon Web Services (AWS)

One of the biggest Cloud Computing providers is Amazon Web Services, offering over 165 fully featured services from data centres globally.

I created a free tier account to play around with and am always amazed by all the things you can do! The most exciting project I’ve done myself is a React app with a continuous integration and delivery (CI/CD) pipeline, so that when you update the code it is built and deployed automatically, and anyone with the URL can access the app from anywhere. Having a platform where all my projects sit, and where I have full control of everything related to compute power, is super exciting to me.

Example architecture of a simple instance. The virtual machine (instance) lives in AWS, in a specific Availability Zone. You can access the instance over the internet, but you need a private key “to let you in”. The EBS volumes in the diagram are essentially “virtual” drives that are not tied to any particular piece of hardware, but are restricted to a particular Availability Zone. Taken from the docs.
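To make the “private key to let you in” bit more concrete, here is a minimal Python sketch of the SSH step. It assumes an Ubuntu AMI (the default login there is ubuntu; Amazon Linux uses ec2-user), and the key file name and IP address are made-up placeholders. It only builds the ssh command and tidies the key’s permissions; it doesn’t connect to anything.

```python
import os
import shlex
import stat


def key_is_private(key_path: str) -> bool:
    """Return True if group and others have no access to the key (e.g. mode 600 or 400).

    OpenSSH refuses to use a private key that other users can read.
    """
    mode = stat.S_IMODE(os.stat(key_path).st_mode)
    return mode & (stat.S_IRWXG | stat.S_IRWXO) == 0


def ssh_command(key_path: str, host: str, user: str = "ubuntu") -> list[str]:
    """Build the argument list for `ssh -i <key> <user>@<host>`.

    "ubuntu" is the default login on Ubuntu AMIs; Amazon Linux uses "ec2-user".
    """
    return ["ssh", "-i", key_path, f"{user}@{host}"]


if __name__ == "__main__":
    key = "my-key.pem"        # hypothetical key pair file downloaded from AWS
    host = "203.0.113.10"     # hypothetical public IP (a documentation-only range)
    if os.path.exists(key) and not key_is_private(key):
        os.chmod(key, 0o400)  # same effect as `chmod 400 my-key.pem`
    print(shlex.join(ssh_command(key, host)))
```

Copying the printed line into a terminal is what actually opens the session on the instance.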

In my day-to-day role, I get to spin up and tear down all sorts of instances (i.e. virtual machines) on our non-production and tooling accounts every day. It always amazes me how quickly I can set up an instance and terminate it! I’m currently studying for the AWS Certified Cloud Practitioner exam, using what I learn on the job as well as resources such as A Cloud Guru and AWS Newbies.

My most used services at the moment include EC2, DynamoDB and S3.

Infrastructure as Code

Now we get to the fun part! Infrastructure as code has been more fun to play with than I ever thought it would be. 😂 The tools I’ve been using to create Amazon Machine Images (AMIs) and build up stacks, environments, platforms etc. are mostly Terraform, Packer and Ansible.

- Packer: an open-source tool for creating identical machine images for multiple platforms from a single source configuration. Packer is lightweight, runs on every major operating system, and is highly performant, creating machine images for multiple platforms in parallel. I have used Packer to build Amazon Machine Images (AMIs), which Terraform then picks up when it creates the instances.

A snippet example of Packer. Taken from the docs.

- Terraform: an open-source infrastructure as code software tool created by HashiCorp. It enables users to define and provision data centre infrastructure using a high-level configuration language known as HashiCorp Configuration Language (HCL), or optionally JSON. I have used Terraform to run a stack apply, which creates the infrastructure.

A snippet example of Terraform. Taken from the docs.

- Ansible: an open-source software provisioning, configuration management, and application-deployment tool. It runs on many Unix-like systems, and can configure both Unix-like systems as well as Microsoft Windows. I have used Ansible to configure my Unix systems.

A snippet example of Ansible. Taken from the docs.
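Because Terraform also accepts JSON, here is a rough Python sketch of how the Packer and Terraform pieces hand over to each other. These are not the snippets from the docs: the region, base AMI ID and the my-app name prefix are hypothetical, and the Packer part uses Packer’s older JSON template format (HCL is the recommended format these days). Packer bakes an AMI named my-app-<timestamp>, and Terraform looks up the newest AMI matching that name and launches an instance from it.

```python
import json
from pathlib import Path

# Hypothetical values, for illustration only.
REGION = "eu-west-2"
BASE_AMI = "ami-0123456789abcdef0"  # placeholder, not a real image ID
IMAGE_PREFIX = "my-app"


def packer_template() -> dict:
    """Packer template (legacy JSON format) that bakes a custom AMI."""
    return {
        "builders": [{
            "type": "amazon-ebs",
            "region": REGION,
            "source_ami": BASE_AMI,
            "instance_type": "t2.micro",
            "ssh_username": "ubuntu",
            # {{timestamp}} is a Packer template function that keeps AMI names unique.
            "ami_name": IMAGE_PREFIX + "-{{timestamp}}",
        }],
        "provisioners": [{"type": "shell", "inline": ["sudo apt-get update"]}],
    }


def terraform_config() -> dict:
    """Terraform JSON config: find the newest baked AMI and launch an instance from it."""
    return {
        "provider": {"aws": {"region": REGION}},
        "data": {"aws_ami": {"app": {
            "most_recent": True,
            "owners": ["self"],  # only images built in our own account
            "filter": [{"name": "name", "values": [IMAGE_PREFIX + "-*"]}],
        }}},
        "resource": {"aws_instance": {"app": {
            "ami": "${data.aws_ami.app.id}",  # reference to the data source above
            "instance_type": "t2.micro",
        }}},
    }


if __name__ == "__main__":
    Path("packer.json").write_text(json.dumps(packer_template(), indent=2))
    # Terraform loads *.tf.json files alongside ordinary *.tf files.
    Path("main.tf.json").write_text(json.dumps(terraform_config(), indent=2))
```

After swapping in a real base AMI, you would run packer build packer.json, then terraform init and terraform apply in the same folder.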

My current workflow for building an instance using infrastructure as code
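As a text stand-in for the workflow diagram, here is a simplified Python sketch of the same order of steps: Packer bakes the AMI, Terraform creates the instance from it, and Ansible configures it. The file names (packer.json, inventory.ini, site.yml) are hypothetical, and by default it only prints the commands rather than running them.

```python
import shlex
import subprocess

# Hypothetical file names; adjust to your own repo layout.
WORKFLOW = [
    ("Bake the AMI", ["packer", "build", "packer.json"]),
    ("Download providers", ["terraform", "init"]),
    ("Preview changes", ["terraform", "plan"]),
    ("Create the instance", ["terraform", "apply"]),
    ("Configure the instance", ["ansible-playbook", "-i", "inventory.ini", "site.yml"]),
]


def run_workflow(dry_run: bool = True) -> list[str]:
    """Print each step in order; unless dry_run, also run it and stop at the first failure."""
    executed = []
    for description, command in WORKFLOW:
        line = shlex.join(command)
        print(f"{description}: {line}")
        if not dry_run:
            # check=True raises CalledProcessError if the step fails,
            # so later steps never run against a half-built instance.
            subprocess.run(command, check=True)
        executed.append(line)
    return executed


if __name__ == "__main__":
    run_workflow(dry_run=True)
```

The ordering is the important part: Terraform can only pick up an image once Packer has built it, and Ansible can only configure an instance once Terraform has created it.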

New tech: Docker and Kubernetes

These are both technologies that M has used to host my blog! I had briefly tried to understand what he was doing when we were rebuilding my blog and moving it over to his Kubernetes clusters. However, other than watching him work his magic, I didn’t really understand it.

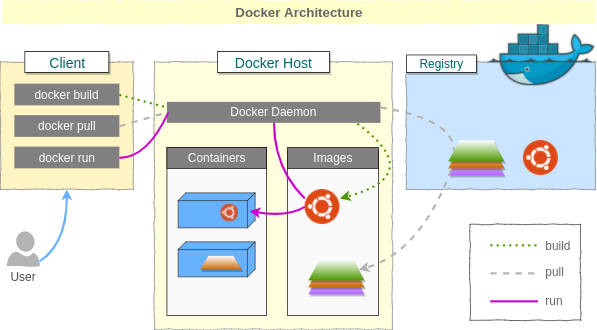

- Docker: a set of platform-as-a-service products that use OS-level virtualization to deliver software in packages called containers. A container is a bit like a lightweight virtual machine that everyone can use, and it should just work, because it contains everything needed for that particular piece of software, e.g. specific packages. (Unlike a full virtual machine, though, containers share the host’s operating system kernel.)

- Kubernetes: an open-source container-orchestration system for automating application deployment, scaling, and management. From my understanding, it manages containers (such as the ones built with Docker) across a cluster of machines. I haven’t delved into it in much detail yet!
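To get a feel for what “orchestration” means, here is a minimal sketch of a Kubernetes Deployment, built as a Python dict and saved as JSON (kubectl accepts JSON manifests as well as YAML). It asks Kubernetes to keep three copies of one container running and to replace any that die. The image name and port are hypothetical; this isn’t the actual config M uses for my blog.

```python
import json

# Hypothetical image name; any Docker image pushed to a registry would do.
IMAGE = "registry.example.com/my-blog:1.0"


def deployment(name: str, image: str, replicas: int = 3) -> dict:
    """A minimal Deployment: keep `replicas` copies of one container running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector tells the Deployment which Pods belong to it,
            # so it must match the labels on the Pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": 80}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    with open("deployment.json", "w") as f:
        json.dump(deployment("my-blog", IMAGE), f, indent=2)
    # Next step (outside Python): kubectl apply -f deployment.json
```

Docker packages the app; Kubernetes decides where those containers run and keeps the count at three.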

The best resource I’ve found out there is “The Illustrated Children’s Guide to Kubernetes”. I’m quite a visual learner too, which means that yes, I always picture these characters whenever I think of Docker and Kubernetes 😂

I didn’t really understand Docker during my previous rotation in development. But now, in my new team, Docker is the containerisation technology we are all moving towards, and we’re hoping to get other teams to do the same.

Over the last week or so, I’ve moved from doing all my work on my local machine to working inside a Docker container. Some of my other team members have also been doing all their work in containers built from the same Docker image and guess what? It just works! This helps stop the “it doesn’t work on my machine, but why does it work on yours?” conversations. I honestly didn’t understand the big deal until my local machine set-up started causing me blockers, but now… all that is gone! I am zen ASF 😎
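Here is a small Python sketch of what “working inside a Docker container” can look like day to day: a docker run command that mounts your project folder into a shared team image and drops you into a shell. The image name is hypothetical, and it assumes the image has bash installed.

```python
import os
import shlex

# Hypothetical name for the team's shared tooling image.
TEAM_IMAGE = "team/platform-tools:latest"


def dev_container_command(project_dir: str, image: str = TEAM_IMAGE) -> list[str]:
    """Build a `docker run` command that opens a shell inside the shared image.

    The project directory is bind-mounted, so edits made on the host show up
    inside the container, while tool versions and packages come from the image,
    which is what gives everyone the same environment.
    """
    project_dir = os.path.abspath(project_dir)
    return [
        "docker", "run",
        "-it",                              # interactive terminal
        "--rm",                             # delete the container when the shell exits
        "-v", f"{project_dir}:/workspace",  # mount the code into the container
        "-w", "/workspace",                 # start in the mounted directory
        image,
        "bash",
    ]


if __name__ == "__main__":
    print(shlex.join(dev_container_command(".")))
```

Because the container is thrown away on exit (--rm) but the code lives on the host, nothing important is lost between sessions.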

Other things that I’ve learned:

- A bit about computer networks: ports and DNS (I’ve also identified that this is my weakest area, so let me know if you’ve got any good resources for learning it!)

- How Consul and Vault work and how we use them: Consul (service discovery and key/value storage) and Vault (storing secrets)

- How to set up a Virtual Machine in VirtualBox

- How to ssh into my Virtual Machine

- My way round Ubuntu, and some cool new Unix commands that make me 100% feel like a hacker
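Since networking is my weakest area, here is a tiny Python sketch (standard library only) of the two ideas from the first bullet: name resolution turns a hostname into IP addresses, and a port is the numbered “door” on a machine that a service listens on. The function names are my own, not from any tool we use. Resolving localhost usually gives 127.0.0.1 and/or ::1, depending on the machine.

```python
import socket


def resolve(hostname: str) -> list[str]:
    """Ask the OS resolver (hosts file, then DNS) which IP addresses a hostname maps to."""
    infos = socket.getaddrinfo(hostname, None)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if something on host:port accepts a TCP connection."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


if __name__ == "__main__":
    print(resolve("localhost"))
    print(port_is_open("127.0.0.1", 22))  # True only if an SSH server is listening locally
```

Port 22 is the standard SSH port, which is the same door used when SSHing into a virtual machine.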

🌟 And it doesn’t stop there!

I’m learning so much day after day. I leave work with my brain a bit frazzled at times, but it is so worth it when what I’m learning eventually starts to sink in.

I didn’t think that I would enjoy infrastructure, but it really is so exciting! I’m looking forward to what else I can learn and pick up in the next few months… I’m sure there will be a blog post about that 😉